Why PWA?

PWA stands for Progressive Web App. In 2014, the W3C published a draft of the Service Worker specification, and in 2015 Chrome began supporting it in production. If we take the emergence of Service Worker, one of PWA's core technologies, as the starting point, then PWA was born in 2015. Before looking at what a PWA is, let's first understand why we need it.

- User experience. Back in 2015, frontend developers spent a lot of time optimizing web apps: speeding up the initial page render, making animations smoother, and so on. Even so, native apps still won on user experience.

- User retention. Native apps could be pinned to the phone's home screen and could bring users back with notifications, while web apps at the time could not.

- Leveraging device APIs. Android and iOS provide abundant device APIs that native apps can easily use with the user's permission. Back then, however, browsers did not fully support them.

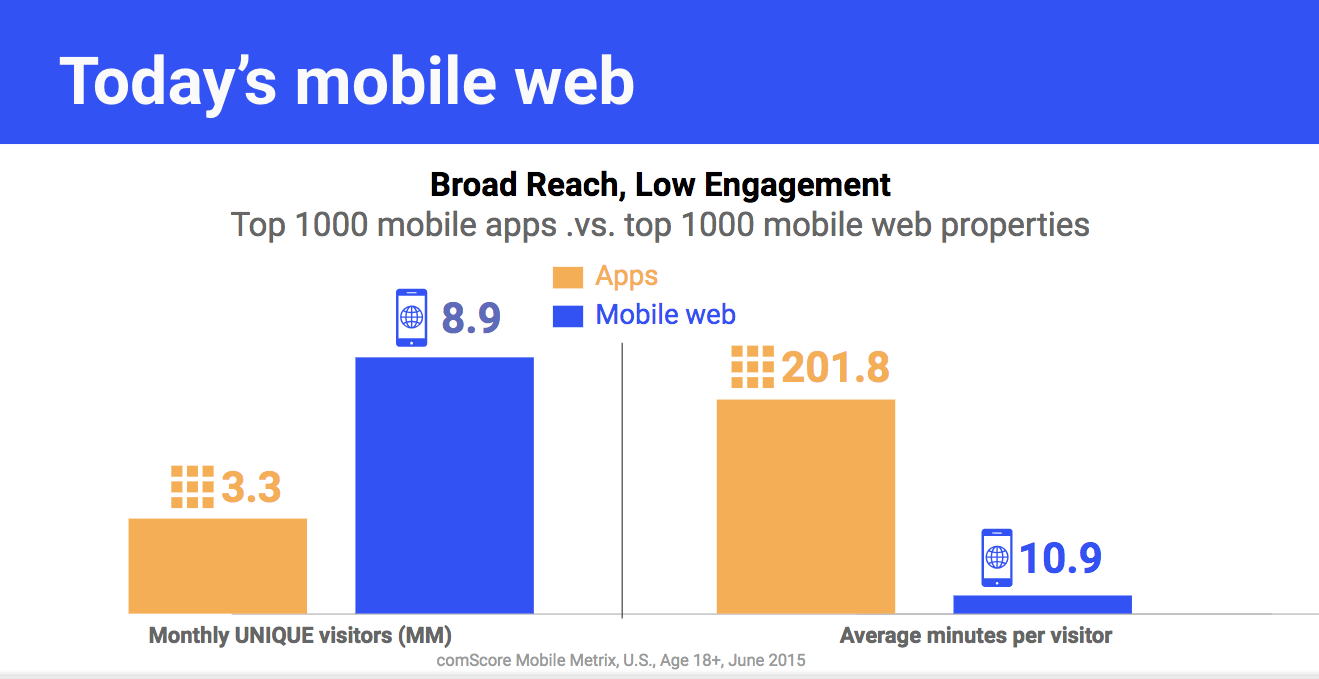

Google's tutorial "Why Build Progressive Web Apps" summarizes the web's problem as "Broad Reach, Low Engagement".

PWA came into being to tackle these disadvantages of web apps in the mobile age.