Building the Software 'Gigafactory'

1. Outcome-Oriented: Autonomous Debugging

Deliver results, not processes.

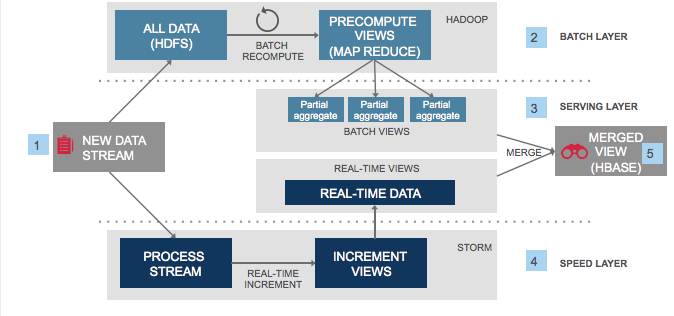

AI must have full closed-loop capability, from bug detection to self-healing. Whether it is invoking curl for diagnostics or parsing logs, the AI should resolve faults on its own and generate test cases to verify its own fixes. Managers focus solely on the final output and never intervene in the intermediate logic.
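The detect, diagnose, fix, verify cycle described above can be sketched as a bounded retry loop. This is a minimal illustration under stated assumptions, not a prescribed implementation: `closed_loop_repair`, `RepairResult`, and the four hook functions are hypothetical names, and the toy "service" is just a dictionary whose port is misconfigured. In a real setup, `check_health` might wrap an HTTP probe of the kind one would otherwise run by hand with curl, and `verify` would run AI-generated test cases.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class RepairResult:
    healed: bool
    attempts: int  # number of fixes actually applied
    applied_fixes: List[str] = field(default_factory=list)


def closed_loop_repair(
    check_health: Callable[[], bool],
    diagnose: Callable[[], Optional[str]],
    apply_fix: Callable[[str], None],
    verify: Callable[[], bool],
    max_attempts: int = 3,
) -> RepairResult:
    """Detect -> diagnose -> fix -> verify, with no human in the loop."""
    applied: List[str] = []
    if check_health():
        return RepairResult(healed=True, attempts=0, applied_fixes=applied)
    for _ in range(max_attempts):
        fix = diagnose()
        if fix is None:
            break  # nothing actionable found; stop and report
        apply_fix(fix)
        applied.append(fix)
        # A fix counts only once the tests and the health check both pass.
        if verify() and check_health():
            return RepairResult(healed=True, attempts=len(applied), applied_fixes=applied)
    return RepairResult(healed=False, attempts=len(applied), applied_fixes=applied)


# Toy "service" (hypothetical): a config dict with a wrong port, plus a log line about it.
EXPECTED_PORT = 8080
state = {"port": 8081}
logs = ["GET /health -> connection refused on port 8081"]


def check_health() -> bool:
    return state["port"] == EXPECTED_PORT


def diagnose() -> Optional[str]:
    # Parse the logs for a refused port and propose the expected one.
    for line in logs:
        match = re.search(r"refused on port (\d+)", line)
        if match and int(match.group(1)) != EXPECTED_PORT:
            return f"port={EXPECTED_PORT}"
    return None


def apply_fix(fix: str) -> None:
    key, value = fix.split("=")
    state[key] = int(value)


def verify() -> bool:
    # Stand-in for AI-generated regression tests.
    return isinstance(state["port"], int) and 0 < state["port"] < 65536


result = closed_loop_repair(check_health, diagnose, apply_fix, verify)
print(result)  # RepairResult(healed=True, attempts=1, applied_fixes=['port=8080'])
```

Bounding the loop with `max_attempts` is a design choice: when the agent cannot converge, it stops and returns what it tried instead of looping forever, so there is still a final output for a manager to inspect.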

2. Efficiency Metric: Token-Measured Productivity

Consumption equals output. Redefine productivity: monthly token consumption is the sole hard metric of efficiency. Exponential leaps in productivity are measured by how many $200/mo subscriptions a single person can exhaust, or how large an agent cluster they can drive simultaneously.

3. Drive Mode: Proactive Autonomy

Break the "Command-Response" loop. Top-tier AI systems should not wait for a human wake-up call. They must be able to observe, decide, and execute autonomously, continuously creating value during the "vacuum periods" when no human is supervising.
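One way to picture breaking the command-response loop is an observe, decide, act cycle that runs on its own cadence. The sketch below assumes hypothetical `observe`, `decide`, and `act` hooks and a toy issue backlog; the `ticks` parameter exists only so the example terminates, whereas a real deployment would presumably run indefinitely under a scheduler or daemon.

```python
import time
from typing import Callable, List, Optional


def autonomous_loop(
    observe: Callable[[], dict],
    decide: Callable[[dict], Optional[str]],
    act: Callable[[str], None],
    ticks: int,
    interval_s: float = 0.0,
) -> List[str]:
    """Run observe -> decide -> act on a fixed cadence, without waiting for a command."""
    actions: List[str] = []
    for _ in range(ticks):
        observation = observe()
        action = decide(observation)
        if action is not None:  # idle ticks are fine; nothing is forced
            act(action)
            actions.append(action)
        time.sleep(interval_s)
    return actions


# Toy environment (hypothetical): a backlog nobody has asked the agent to handle.
backlog = ["issue-1", "issue-2"]


def observe() -> dict:
    return {"next": backlog[0] if backlog else None}


def decide(observation: dict) -> Optional[str]:
    return f"triage {observation['next']}" if observation["next"] else None


def act(action: str) -> None:
    backlog.pop(0)  # hypothetical: "triaging" just removes the item


done = autonomous_loop(observe, decide, act, ticks=5)
print(done)  # ['triage issue-1', 'triage issue-2']
```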

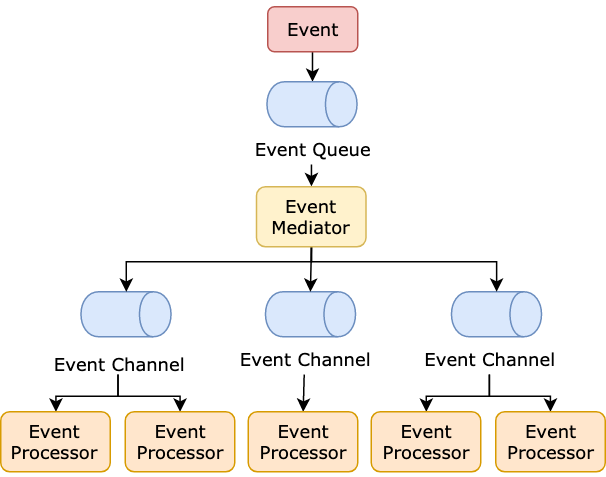

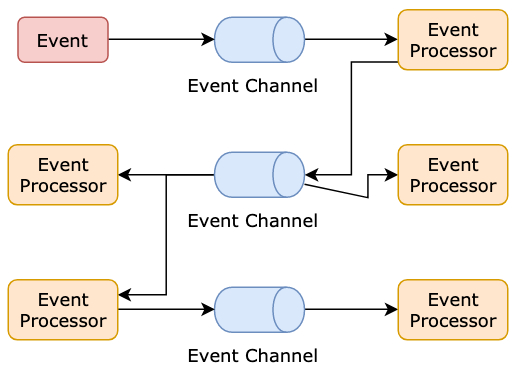

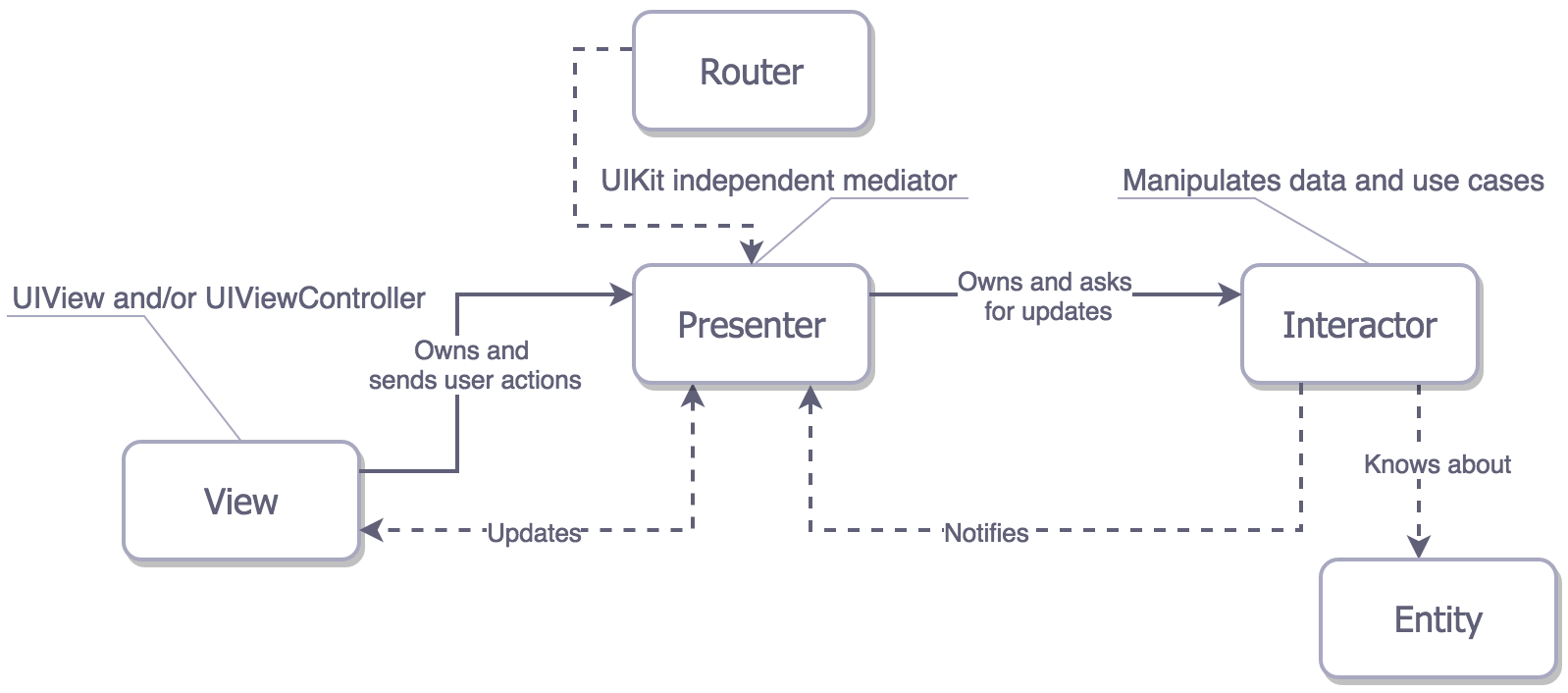

4. Fault-Tolerant Design: Order within Chaos (Resilient Architecture)

Constrain flexibility through architecture. Build a highly fault-tolerant foundation. Even if the AI "goes rogue" in some local piece of logic, it stays confined to the safety zones of a robust system framework. Good architecture gives the AI the freedom to fail without letting the entire system collapse.
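A minimal sketch of "freedom to fail" inside a safety zone: agent-proposed steps pass through an allowlist, and any exception raised by an allowed step is recorded rather than propagated. The action names and the `ALLOWED_ACTIONS` set are hypothetical. The sketch uses in-process exception handling only for illustration; real confinement would more likely rely on process, container, or permission boundaries.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical safe zone: the only actions an agent may execute.
ALLOWED_ACTIONS = {"read_file", "run_tests"}


def run_confined(steps: List[Tuple[str, Callable[[], Any]]]) -> Dict[str, List[str]]:
    """Execute agent-proposed steps so that no single step can take the system down."""
    report: Dict[str, List[str]] = {"ok": [], "blocked": [], "failed": []}
    for name, step in steps:
        if name not in ALLOWED_ACTIONS:
            report["blocked"].append(name)  # outside the safe zone: never executed
            continue
        try:
            step()
            report["ok"].append(name)
        except Exception as exc:  # local failure is recorded, not propagated
            report["failed"].append(f"{name}: {exc}")
    return report


def flaky_tests() -> None:
    raise RuntimeError("test crashed")


steps = [
    ("read_file", lambda: "contents"),
    ("drop_database", lambda: None),  # a "rogue" proposal
    ("run_tests", flaky_tests),
]
print(run_confined(steps))
# {'ok': ['read_file'], 'blocked': ['drop_database'], 'failed': ['run_tests: test crashed']}
```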

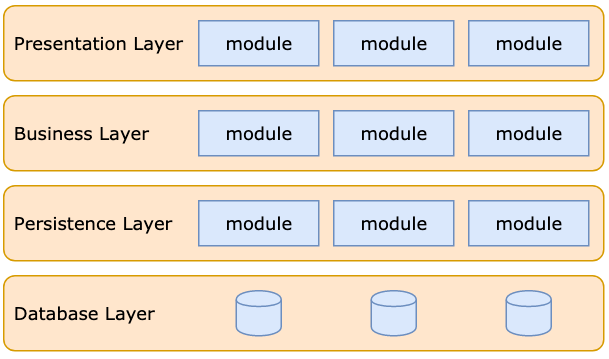

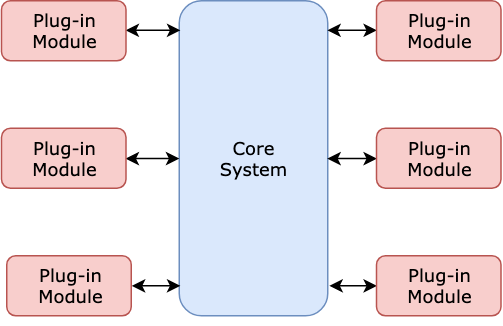

5. Asset Form: Modular Progress

Capabilities as assets. AI capabilities must be digitized, measurable, and evolvable. Through modular design, ensure every newly developed capability can be reused and combined like building blocks, forming an ever-accumulating competitive moat.
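Treating capabilities as reusable, measurable building blocks could look like a small registry: each capability is registered under a name, pipelines are composed from names, and every invocation is counted. `CapabilityRegistry` and the three toy capabilities are hypothetical stand-ins (for example, `summarize` just keeps the first sentence), not a real framework.

```python
from collections import Counter
from typing import Callable, Dict, List


class CapabilityRegistry:
    """Hypothetical registry: each capability is a named, reusable, metered block."""

    def __init__(self) -> None:
        self._caps: Dict[str, Callable[[str], str]] = {}
        self.usage: Counter = Counter()  # how often each capability has run

    def register(self, name: str):
        def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
            self._caps[name] = fn
            return fn
        return decorator

    def compose(self, names: List[str]) -> Callable[[str], str]:
        missing = [n for n in names if n not in self._caps]
        if missing:
            raise KeyError(f"unknown capabilities: {missing}")

        def pipeline(data: str) -> str:
            for n in names:
                self.usage[n] += 1
                data = self._caps[n](data)  # looked up at call time
            return data

        return pipeline


registry = CapabilityRegistry()


@registry.register("strip")
def strip(text: str) -> str:
    return text.strip()


@registry.register("summarize")
def summarize(text: str) -> str:
    return text.split(".")[0] + "."  # toy stand-in: keep the first sentence


@registry.register("shout")
def shout(text: str) -> str:
    return text.upper()


headline = registry.compose(["strip", "summarize", "shout"])
print(headline("  Gigafactory online. Details follow.  "))  # GIGAFACTORY ONLINE.
print(registry.usage["summarize"])  # 1
```

Because pipelines refer to capabilities by name and look them up at call time, a capability can be improved and re-registered without changing the pipelines that use it, which is one possible reading of "evolvable".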

6. Boundary Expansion: Omni-Agent Factory

Limitless substitution. Squeeze every drop of potential out of AI, from writing code and producing video to automating social media management. The goal is to transform the company into a highly automated "Gigafactory," where humans serve as Chief Architects.

7. Evolutionary Logic: Invent and Simplify

Work backwards; break through by brute force. Do not pay the tax of over-engineering. First, invent the product that serves the customer using the most direct (even "clunky") methods. Once the business loop is validated, use technical refinement to simplify and rebuild it.